Real-time business is a modern phenomenon, and business transformation has accelerated many business events in recent years. However, the execution of business events has always occurred in real time. It is the processing of the data related to business events that has accelerated, rather than the events themselves.

Traditionally, the most efficient way to process data has been in batches, potentially hours or even days following an event. As demand for real-time interactive applications grows more pervasive and a greater proportion of data processing is conducted in real time, streaming data and events become increasingly important to organizations. Reliance on batch data processing is so entrenched, however, that for many data practitioners and business executives, streaming data and events remain an alien concept.

It does not help that streaming data and events have a language of their own. Phrases such as messaging, event processing, stream processing and streaming analytics are often used interchangeably but have specific meanings, the nuances of which are not necessarily clear to the uninitiated. Without getting too deep into the technical weeds, this post will help those investigating streaming data and events to understand the vocabulary and the role played by different components of streaming data and events.

An ideal starting point is the definition of events, messages and streams. Simply put, an event is a thing that happens. In the context of an application, an event is a change of state, such as a sensor identifying a new temperature reading. Messaging is the sharing of information between applications and systems about events. As an event occurs, the application generates a message about the event that is shared with other applications. Messages contain not only the event data but also metadata related to the application that generated it, the classification of the message (known as a topic), and how and where the message is to be routed. Individual messages can be published sequentially in the form of message queues, while a continuous flow of event messages is a stream (or event stream).
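To make the vocabulary concrete, here is a minimal Python sketch of a message, a message queue and a stream. The names (`EventMessage`, `temperature_stream`, the `sensors.temperature` topic and the `sensor-42` source) are illustrative assumptions rather than part of any particular product, and the fields are a simplified subset of the metadata a real message would carry.

```python
from collections import deque
from dataclasses import dataclass
from typing import Any, Dict, Iterable, Iterator


@dataclass(frozen=True)
class EventMessage:
    """A message describing one event (hypothetical, simplified shape)."""
    topic: str                # classification of the message
    payload: Dict[str, Any]   # the event data: the change of state
    source: str               # metadata: the application that generated it
    sequence: int             # metadata: order in which it was published


def temperature_stream(readings: Iterable[float],
                       source: str = "sensor-42") -> Iterator[EventMessage]:
    """Yield one message per reading; the generator stands in for a stream."""
    for seq, celsius in enumerate(readings):
        yield EventMessage(
            topic="sensors.temperature",
            payload={"celsius": celsius},
            source=source,
            sequence=seq,
        )


# A message queue holds individual messages in the order they were published.
queue = deque(temperature_stream([21.5, 21.7, 22.1]))
first = queue.popleft()
print(first.topic, first.payload, first.sequence)
```

The generator produces messages one at a time for as long as readings keep arriving, which is the essential difference between a stream and a fixed batch of records.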

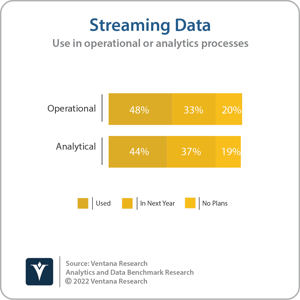

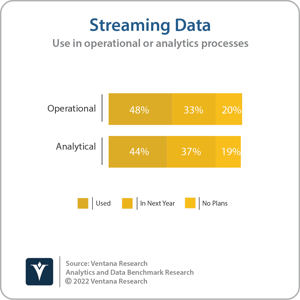

By processing streams of event messages as they are communicated, organizations extract meaningful and actionable information in real time. Ventana Research’s Analytics and Data Benchmark Research indicates that 44% of participants use streaming data in analytics processes. A slightly higher proportion of participants (48%) use streaming data in operational processes. While it is tempting to jump ahead, there is more to understand about event delivery and orchestration in addition to event-driven architecture ‒ precursors to and enablers of stream processing and streaming analytics.

A critical component of messaging is the event broker. This is message-oriented middleware that is responsible for handling the communication of messages between the system or application generating event messages (referred to as a publisher, producer or sometimes an event source) and the application receiving the message (the subscriber, consumer or event sink). Event brokers can often be overlooked as mere plumbing, but they enable communication between applications, cloud services and devices in a distributed architecture without the need to be tightly coupled.
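The decoupling an event broker provides can be illustrated with a toy, in-memory publish/subscribe sketch. `InMemoryBroker` and its methods are hypothetical names chosen for this example; production brokers add persistence, delivery guarantees and network transport, none of which is modeled here.

```python
from collections import defaultdict
from typing import Any, Callable, DefaultDict, List

Handler = Callable[[Any], None]


class InMemoryBroker:
    """Toy event broker: routes each message to the subscribers of its topic."""

    def __init__(self) -> None:
        self._subscribers: DefaultDict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Handler) -> None:
        # The subscriber registers interest in a topic, not in a publisher.
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, message: Any) -> int:
        # The publisher knows only the topic; it never calls consumers directly.
        handlers = self._subscribers.get(topic, [])
        for handler in handlers:
            handler(message)
        return len(handlers)  # number of subscribers the message reached


def alert_if_hot(message: dict) -> None:
    """Second consumer: keeps only readings above an assumed 30 degree limit."""
    if message["celsius"] > 30:
        alerts.append(message)


broker = InMemoryBroker()
dashboard: list = []
alerts: list = []

broker.subscribe("sensors.temperature", dashboard.append)
broker.subscribe("sensors.temperature", alert_if_hot)

broker.publish("sensors.temperature", {"celsius": 21.5})
broker.publish("sensors.temperature", {"celsius": 31.0})
print(len(dashboard), len(alerts))
```

Neither consumer is known to the publisher, so a third subscriber could be added without touching the code that generates the events. That is the loose coupling described above.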

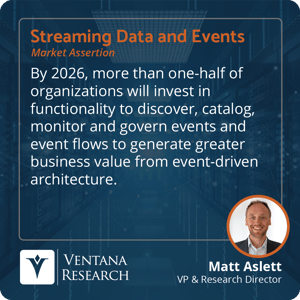

This makes event brokers fundamental enablers of event-driven architecture (EDA). EDA is the underlying pattern organizations use to take advantage of events to deliver real-time business processes. It encompasses publish/subscribe processing of individual events, complex event processing (which involves aggregation of multiple events) and stream processing. The critical components for EDA are a network of event brokers (sometimes known as an event mesh) and event management software for discovering, cataloging, governing and securing events and event-driven applications, including schema and data quality management. I assert that by 2026, more than one-half of organizations will invest in functionality to discover, catalog, monitor and govern events and event flows to generate greater business value from event-driven architecture.

In addition to operational aspects of managing the delivery and orchestration of events, messages and event streams, event management software provides capabilities for analytic use cases based on extraction of meaningful and actionable information from streams of event messages. Stream processing involves acting on the event data as it is generated and communicated rather than simply communicating messages about events between applications. Stream processing encompasses streaming integration (including aggregation, transformation, enrichment and ingestion) and streaming analytics, which uses streaming compute engines to analyze streams of event data in motion before storing historical event data in an external data platform. More recently, we have seen the emergence of streaming databases designed to provide a single environment for continually processing streams of event data using SQL queries and real-time materialized views and persisting historical event data for further analysis.
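As a rough illustration of acting on event data as it arrives, the sketch below computes a per-window average over a stream of readings, emitting each result as soon as its window closes rather than waiting for a batch. The function name `tumbling_average`, the 10-second window and the sample readings are assumptions made for this example; it also assumes events arrive in timestamp order, which real streaming engines do not take for granted.

```python
from typing import Iterable, Iterator, Optional, Tuple


def tumbling_average(events: Iterable[Tuple[float, float]],
                     window_seconds: float) -> Iterator[Tuple[float, float]]:
    """Average (timestamp, value) events over fixed, non-overlapping windows.

    Yields (window_start, mean) each time a window closes.
    Assumes events arrive in timestamp order.
    """
    window_start: Optional[float] = None
    total = 0.0
    count = 0
    for timestamp, value in events:
        start = timestamp - (timestamp % window_seconds)
        if window_start is None:
            window_start = start
        elif start != window_start:
            # The event belongs to a later window: emit the finished one.
            yield window_start, total / count
            window_start, total, count = start, 0.0, 0
        total += value
        count += 1
    if count:
        # Flush the last, still-open window once the input ends.
        yield window_start, total / count


readings = [(0, 20.0), (4, 22.0), (11, 30.0), (15, 32.0), (21, 25.0)]
results = list(tumbling_average(readings, window_seconds=10))
print(results)  # [(0, 21.0), (10, 31.0), (20, 25.0)]
```

A streaming database expresses the same idea declaratively, typically as a SQL query whose result is kept up to date as a materialized view while new events arrive.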

I previously wrote about the need for organizations to take a holistic approach to the management and governance of data in motion alongside data at rest. I assert that by 2025, standard information architectures for more than 7 in 10 organizations will include streaming data and event processing, allowing organizations to be more responsive and provide better customer experiences. Organizations that have not invested in streaming data and events risk being left behind. Since streaming analytics delivers actionable insights from data in motion, it can become the center of attention. I recommend that organizations lay the foundation for EDA and stream processing by ensuring the fundamental importance of event brokers and messaging is not overlooked.

Regards,

Matt Aslett
